Averaging Rainfall

Averaging Annual Rainfall

Network Common Data Form (netCDF)

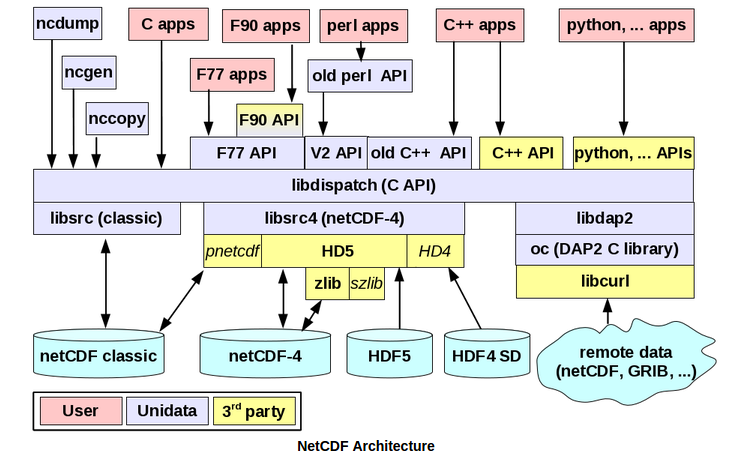

NetCDF is a set of software libraryies and machine-independent data formats that support the creation, access, and sharing of array-oriented scientific data. NetCDF data is self-describing, multi-dimensional and multivariate.

NetCDF is a set of software libraries and describing, machine-independent data formats that support the creation, access, and sharing of array-oriented scientific data. NetCDF was developed at Unidata. Unidata provides data and software tools for use in geoscience education and research. Unidata is part of the University Corporation for Atmospheric Research(UCAR) Community Programs(UCP).1

NetCDF data is accessible via a series of language APIs: C, C++, Fortran77/90, Java, NCL, Objective-C, Perl, Python, R, Tcl/Tk, and Visual Basic

NetCDF data is also accessible via a series of applications including: Panoply, nco, IDV, GrADS, FERRET and various others. For the full listing of applications, look here..

With the help of a THREDDS Data Server, large netCDF datasets are easily accessible and discoverable through a client application or through HTTP.

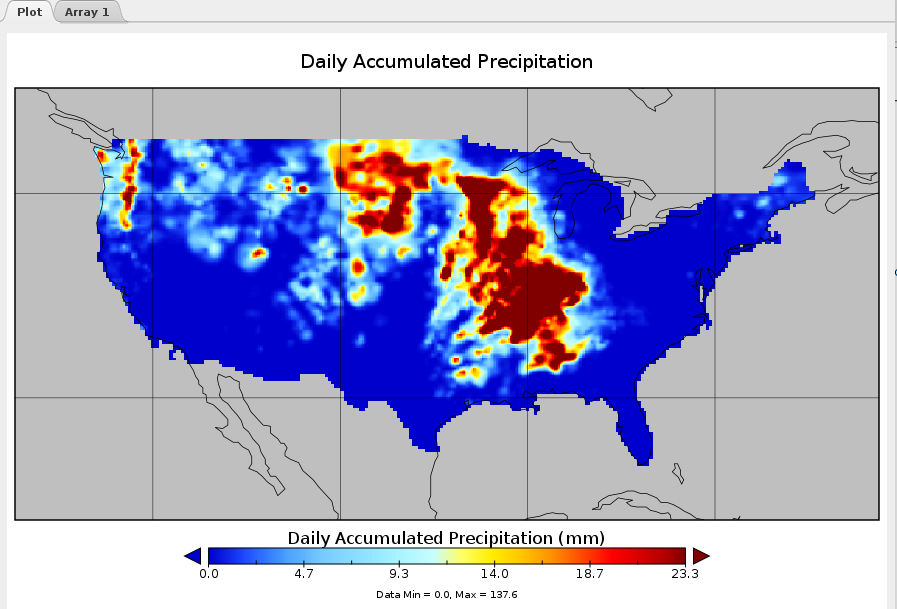

The multidimensional nature of netCDF data makes storage of complex data such as temperature and humidity simple. Also, since netCDF allows for multivariate storage, several variables can be subsets, ensembled and explored simultaneously. An issue can be the process of creating a usable dataset from netCDF data. This can be due to the sensor-based nature of netCDF, which is a primary dataset (i.e. daily precipitation sums). In order to get the proper dataset, it often requires researchers to subset, and ensemble values over a dimension. Ensembled data sets could be seasonal datasets, climatologies, or long-term averages.

Overview

Procedure

For our case study, we created an annual average for precipitation between 2007-2014.

We had to first locate the datasets from http://esrl.noaa.gov/psd/thredds/dodsC/Datasets/cpc_us_precip/RT

This brought us 9 years of datasets.

Subsetting data

These datasets were then subsetted to our bounding box with the following command:

netCDF Kitchen Sink

The nickname ‘kitchen sink’ is a catchal-all because ncks combines most features of ncdump and nccopy with extra features to extract, hyperslab,multi-slab, and translate into one versatile utility. ncks extracts hayperslabs from specified variables using the option ‘-d’2. This hyperslab feature allows us to specify the subsets for the latitude and longitude dimensions.

ncks -O -d lon,234.0,244.0 -d lat,44.0,50.0 ${URL}.${year}.nc subset.${year}.ncIn the above command, we set the longitude and latitude dimensions to reflect the extent of Washington state. The ‘-O’ option specifies that the command will overwrite the output file if it already exists, which is helpful for script testing.

Removing bad values

If you look at the metadata for the subsetted file, you can see that the ‘precip’ variable is a float data type. Instead of using null values in their datasets, netCDF uses designated fill values based on the variable data type. The fill value for floats comes in as 9.9692e+36 or ~(15 * 2^119). This value is important since all bad values will be assigned this values. This includes raster cells above the ocean since the data set only covers land-based precipitation data. Since our dataset has a large resolution at .25° x .25°(approximately 27.83km x 27.83km), we need to resample our grid to match the other outputs at 1kmx1km. Interpolating between Null values can cause more values to become than necessary, so we will convert the null values to 0 prior to interpolating the data. Setting null values to zero has the benefit of keeping interpolated values as actual numbers rather than nulls. We will be using the netCDF Arithmetic Processor from the NCO utility library.

netCDF Arithmetic Processor

The netCDF Arithmetic Processor(ncap2) was written to perform arbitrary algebraic transformation of data and archive the results as easily as possible3. Instructions for ncap2 can be contained in an MCP script file or delivered inline with the ‘-s’ flag. One of ncap2’s most powerful features is its ability to define new variables in terms of existing variables.

Overview

Panoply The ncap2 command we will be using is:

ncap2 -O -s 'where(precip < 0.0 || precip > 10000) precip=0;' subset.${year}.nc narm.${year}.ncIn the above command, we use the inline script flag ‘-s’ to specify that the cell value should be set to 0 if it is less than 0 or greater than 10,000. Once again, we include the overwrite flag ‘-O’ for scripting purposes.

Summing yearly precipitation

Since our analysis is looking at patterns of precipitation over time, rather than the amount of rain accumulated during a particular day, we will have create an ensemble dataset with the sum of precipitation for a given year. We will be using the NetCDF Record Averager to sum our precipitation values over the time dimension.

netCDF Record Averager

The netCDF Record Averager averages record variables across an abitrary number of input-files. ncra will apply a given operation to the all the cell values across a given dimension.2

The ncra command we will be using is:

ncra -O --op-typ=ttl -d time,,,1 -v precip narm.${year}.nc annual.${year}.ncIn the above command, the ‘-v precip’ flag defines the variable ncra will operate on. The ‘–op-type=ttl’ flag indicates that we want ncra to calculate the sum of records while the ‘-d’ flag indicates that we are summing the values across the time dimension. Once again we include the overwrite flag ‘-O’ for scripting purposes.

Regridding netCDF files

The next step we will take is to scale our precipitation raster to match the resolution and extent of the other inputs. There is no turn-key solution for regridding netCDF datasets, so we had to create a script from some existing NCL examples.

NCAR Command Language

The NCAR Command Language (NCL), a product of the Computational and Information Systems Laboratory at the National Center for Atmospheric Research(NCAR) and sponsored by the National Science Foundation, is a free interpreted language designed specifically for data analysis and visualization. NCL supports netCDF 3/4, GRIB 1/2, HDF 4/5, HDF-EOS 2/5, shapefile, ASCII and binary formats4. NCL gives users fine-grained access to netCDF datasets, their dimensions, variable and attributes. Another benefit of NCL is the amount of working examples available online and the quality of language documentation. The NCL variable model is based on the netCDF variable model which makes handling netCDF data naturally.

The script we used for the regridding can be found in the Resources page.

Averaging annual values

Once we have regridded the data, we have to create an average of the annual precipitation totals. We will use the netCDF Ensemble Statistics(nces)Utility to create the average annual dataset.

netCDF Ensemble Statistics

netCDF Ensemble Statistics performs gridpoint statistics on variables across an arbitray number(an ensemble) of input files and/or input groups within each file. nces is distinct from ncra which performs statistics only over the record dimension(s)(e.g. time) and weights each record in each record dimension evenly2

The nces command we will be using is:

nces -O -n 8,2,1 ${OUTPUTDIR}/regrid.2007.nc avg_annual.ncThe above command uses the loop flag ‘-n 8,2,1’ which uses the following syntax -n file_number,digit_number,numeric_increment where file_number is the number of files, digit_number is the fixed number of numeric digits comprising the numeric_suffix and numeric_increment is the constant, integer-valued difference between the numeric_suffix of any two files5.

Converting to GeoTiff

In order for us to combine our new averaged annual precipitation layer with our other landslide susceptibility inputs, we will have to define the lat/lon extent of our data and project our data into EPSG:2927. This can be done using a simple R script. Our R Script will require a few libraries

library(raster)

library(ncdf4)

library(rgdal)Once our libraries have loaded we can set the input directory to the folder holding our precipitation layer and load it as a raster.

setwd('path/to/files')

r <- raster('avg_annual.nc')

#get netCDF extent

oldExt <- extent(r)

# set new netCDF extent by subtracting 360 from X coordinates

xMin <- oldExt[1] - 360

xMax <- oldExt[2] - 360

yMin <- oldExt[3]

yMax <- oldExt[4]

# create extent object

geoExt <- extent(xMin, xMax, yMin, yMax)

r <- setExtent(r, geoExt, keepres=TRUE)Now that the extent is properly set, we will set the new projection and projected extent information

newProj <- '+proj=lcc +lat_1=47.33333333333334 +lat_2=45.83333333333334 +lat_0=45.33333333333334 +lon_0=-120.5 +x_0=500000.0001016001 +y_0=0 +ellps=GRS80 +to_meter=0.3048006096012192 +no_defs'

xMinPrj <- 548554.658

xMaxPrj <- 2558498.351

yMinPrj <- 77271.774

yMaxPrj <- 1366159.434

xRes <- 1000

yRes <- 1000

newRaster <- raster(xmn=xMinPrj, xmx=xMaxPrj, ymn=yMinPrj, ymx=yMaxPrj, crs=newProj, res=1000)

newR <- projectRaster(r, newRaster)

print("Finished projecting raster")

summary(newR)

fileout <- "testRout.tif"

print(paste0("Writing new Raster to ", fileout))

writeRaster(newR, filename=fileout, format="GTiff", options=c("COMPRESS=ZIP"), overwrite=TRUE)

print("Finished outputting raster. Exiting...")-

https://www.unidata.ucar.edu/software/netcdf/docs ↩

-

http://stderr.org/doc/nco/html/ncap2-netCDF-Arithmetic-Processor.html ↩

-

In the following command, we set the longitude and latitude dimensions to reflect the extent of Washington state. The ‘-O’ option specifies that the command will overwrite the output file if it already exists, which is helpful for script testingo5]: The NCAR Command Language (Version 6.In the following command, we set the longitude and latitude dimensions to reflect the extent of Washington state. The ‘-O’ option specifies that the command will overwrite the output file if it already exists, which is helpful for script testingo2.1) [Software]. (2014). Boulder, Colorado: UCAR/NCAR/CISL/VETS. http://dx.doi.org/10.5065/D6WD3XH5 ↩

-

http://nco.sourceforge.net/nco.html#Specifying-Input-Files ↩